Impressive, very nice…

OpenAI had to do 3 things to accomplish this:

1. spend half a million dollars on electricity alone through 34 days of training

2. fine-tune [lobotomize :(]

3. create a beautiful UI so that people can do inference

Right now only big actors have the budget to do the first at scale, and they are secretive about how they do the second. Many people are working on the third, and some on the second. There are even occasional small attempts at the first!

BEWARE!

MANY PEOPLE SEE OPEN SOURCE AI AS THE DEVIL, THE PANDORA’S BOX, LEADING TO HUMANITY’S DEMISE. PROCEED WITH CAUTION

Pre-trained models

I’ll look through these here. These are mostly pre-trained models, and links go to their GitHub repos unless specified otherwise.

pretrained mini BERT at huggingface

Gato from DeepMind, one of the first multimodal models and a small one (up to 1.18B parameters), at Wikipedia

Open source imitation of Gato

GLM-130B - bilingual (English-Chinese) model

Llama - well-known model from Meta

Llama C++ (llama.cpp) - port of the Llama inference code to C/C++

GPT-J

An open source competitor to GPT. It’s a brainchild of EleutherAI, a research group that arose in 2020 on a Discord server.

Pygmalion.ai

This project is focused on a small, romantic-relationship-oriented character model with 6B parameters, hosted on Hugging Face. Here’s their FAQ. They have their own Matrix chatroom.

RWKV-LM

An RNN with LLM-level performance, attention-free. Quite unique technologically.

The person behind this is a powerhouse, with good support:

You are welcome to join the RWKV discord https://discord.gg/bDSBUMeFpc to build upon it. We have plenty of potential compute (A100 40Gs) now (thanks to Stability and EleutherAI), so if you have interesting ideas I can run them.

(excerpt from the GitHub README).

Loaders

These let you run a pre-trained LLM on lower-spec hardware. Don’t ask me how that works.
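That said, one trick these tools are known to share is offloading: keep the weights somewhere cheap (CPU RAM or disk) and pull in only the layer that is needed right now. Below is a toy sketch of that idea in plain Python. It is not how any of the projects below is actually implemented; the function names (`save_layers`, `run_offloaded`) and the tiny two-layer "model" are made up for illustration.

```python
import os
import pickle
import tempfile


def save_layers(layers, directory):
    """Write each layer's weights to its own file and return the file paths."""
    paths = []
    for i, weights in enumerate(layers):
        path = os.path.join(directory, f"layer_{i}.pkl")
        with open(path, "wb") as f:
            pickle.dump(weights, f)
        paths.append(path)
    return paths


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def run_in_memory(layers, x):
    """Baseline: every layer's weights sit in memory for the whole pass."""
    for weights in layers:
        x = [max(0.0, v) for v in matvec(weights, x)]  # linear layer + ReLU
    return x


def run_offloaded(paths, x):
    """Same forward pass, but only one layer's weights are loaded at a time."""
    for path in paths:
        with open(path, "rb") as f:
            weights = pickle.load(f)  # fetch this layer from disk
        x = [max(0.0, v) for v in matvec(weights, x)]
        del weights  # drop the layer before loading the next one
    return x


# Hypothetical toy model: two 2x2 layers.
layers = [
    [[1.0, 2.0], [0.0, -1.0]],
    [[0.5, 0.5], [1.0, 0.0]],
]

with tempfile.TemporaryDirectory() as tmp:
    paths = save_layers(layers, tmp)
    offloaded = run_offloaded(paths, [1.0, 1.0])

print(offloaded)
```

The output matches the in-memory version; the price is the extra loading time per layer, which is the trade-off the real loaders work hard to hide.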

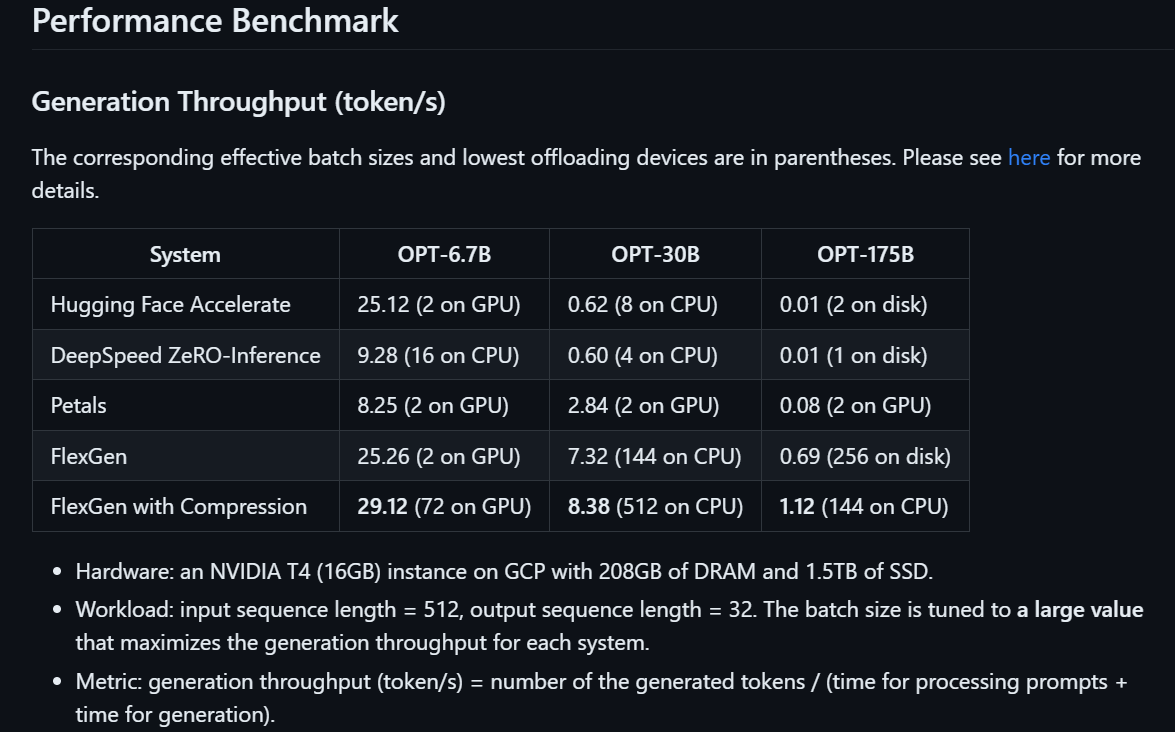

FlexGen

Open source FlexGen specializes in running pre-trained models on weak GPUs. They boast a great performance benchmark table there.

Huggingface Accelerate

A very broad project; read more about it on the Hugging Face wiki.

ZeRO Inference

Inference-focused loader from DeepSpeed. They boast smooth scaling up to thousands of GPUs. They talk a bit about distributed running, but seemingly only in the context of many A100 units in a server farm, not machines spread worldwide.

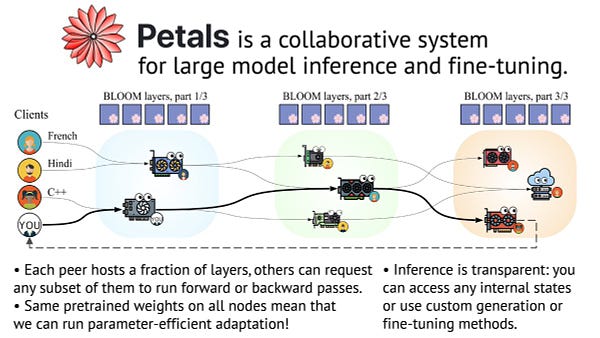

Petals

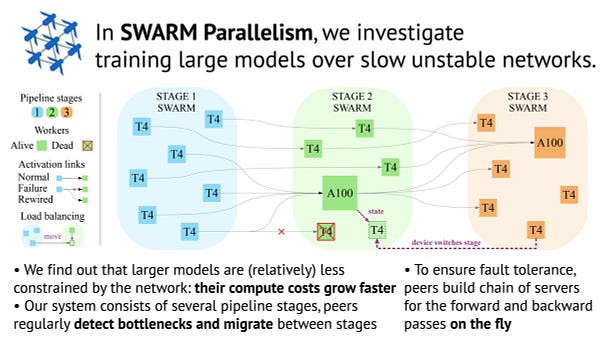

Petals provides fine-tuning options through the SWARM architecture.

Learn more about their memetics on their Twitter.

And grok their tech in the SWARM repo on GitHub and the main Petals repo.

One thing you might notice - there are 15 contributors, most of them Russian.

Max Ryabinin, author of the tweets above, is actually a PhD student at Higher School of Economics in Moscow.

This is the project the USG does not want you to know about!

Let's evaluate the Petals project: it has a poor bus factor due to a localized core team, and only a small community to fall back on. We cannot rule out backdoor institutional support from the Russian government. If the government did not support it, that would be a mistake on their part.

Sparsity as a competing approach

A key paper on sparsity for models of this size seems to be Frantar & Alistarh 2023, where over 100B out of 175B parameters are ignored while maintaining decent performance. That is quite promising, but this kind of sparsity is applied only once the training is done.
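To get a feel for the numbers: 100B out of 175B is roughly 57% of the weights set to zero. The sketch below uses plain magnitude pruning (zero out the smallest weights) on a made-up list of seven weights. This is the simplest stand-in for the idea, not the paper's method, which is more involved; `magnitude_prune` and the weight values are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude share zeroed out."""
    n_prune = round(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Share of parameters ignored, taken from the 100B-of-175B figure.
sparsity = 100 / 175

# Hypothetical weights of an already trained layer.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3]
pruned = magnitude_prune(weights, sparsity)

print(f"sparsity: {sparsity:.0%}")
print(pruned)
```

Note that the function takes finished weights as input, which is the whole point: it makes an existing model cheaper to run, it does not make training one any cheaper.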

Tech-wise, the small models and loaders are great but limited. To compete with OpenAI, decentralized training is necessary.

These are all brave attempts, but we need more…

Follow these accounts on Twitter!

Links to other parts:

rats and eaccs 1

1.1 https://doxometrist.substack.com/p/tpot-hermeticism-or-a-pagan-guide

1.2 https://doxometrist.substack.com/p/scenarios-of-the-near-future

making it 2

2.1 https://doxometrist.substack.com/p/tech-stack-for-anarchist-ai

2.2 https://doxometrist.substack.com/p/hiding-agi-from-the-regime

2.3 https://doxometrist.substack.com/p/the-unholy-seduction-of-open-source

2.4 https://doxometrist.substack.com/p/making-anarchist-llm

AI POV 3

3.1 https://doxometrist.substack.com/p/part-51-human-desires-why-cev-coherent

3.2 https://doxometrist.substack.com/p/you-wont-believe-these-9-dimensions

4 (techno)animist trends

4.1 https://doxometrist.substack.com/p/riding-the-re-enchantment-wave-animism

4.2 https://doxometrist.substack.com/p/part-7-tpot-is-technoanimist

5 pagan/acc https://doxometrist.substack.com/p/pagan/acc-manifesto