Pagan / Acc Chapter 3.1

You Won't Believe These 9 Dimensions to Problems in Life, Work and AI Alignment - Number 8 Will Shock You!

When you ask Bing for problem dimensions it'll give you something like this:

Time: How long does it take to solve the problem?

Cost: How much money or resources are needed to solve the problem?

Quality: How good is the solution to the problem?

Scope: How big or small is the problem?

Stakeholders: Who are the people affected by the problem or the solution?

Risks: What are the potential negative consequences of the problem or the solution?

Ethics: How does the problem or the solution align with your values and principles?

Emotions: How do you feel about the problem or the solution?

These apply well to many cases, yet they are not quite general enough. What about broad-scoped problems of science? How is paleontology different from studies conducted at 'body farms'? How is evolutionary psychology different from the study of visual illusions? How is cosmology different from particle physics?

Only the latter of each pair are real sciences - at least according to Thomas Hobbes. Now all 6 can be physicalist, but not all can be empiricist. Cosmology has nearly 0 immediately testable predictions.

The goal here is to examine various types of inquiries and problems and to outline different scenarios for AGI development. That is the first step towards systematizing the discussion of these problems. Systematization is necessary to get a conceptual toolset for balanced and nuanced conversation beyond the doomerism of the Yuddite school and the unrestrained optimism of e/acc.

The thermodynamic arguments of the e/acc manifesto are far from watertight, unlike its anti-authoritarian ones.

I spoke about the need for this before.

https://twitter.com/doxometrist/status/1643988279266795521

This post is about outlining classes of problems. It is very abstract and broad: we need to examine game theory, philosophy of science, reinforcement learning and Skinner boxes. We will get some n dimensions we can categorize problems into, creating 2^n classes of problems, assuming all dimensions are binary. The problems will be discussed from the perspective of an 'agent', taken broadly. That agent could be a human, a squirrel, an LLM with write access, Searle's Chinese room or a Kafkaesque bureaucracy.
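To make the 2^n count concrete, here is a minimal sketch that enumerates every class for a handful of binary dimensions. The dimension names are hypothetical placeholders and `problem_classes` is a helper made up for this illustration.

```python
from itertools import product

# Hypothetical placeholder names; any n binary dimensions would do.
dimensions = ["dim_a", "dim_b", "dim_c"]

def problem_classes(dims):
    """Return one dict per problem class: every True/False combination."""
    return [
        dict(zip(dims, values))
        for values in product([False, True], repeat=len(dims))
    ]

classes = problem_classes(dimensions)
print(len(classes))  # 2**3 classes for three binary dimensions
print(2 ** 9)        # the count for nine binary dimensions
```

With nine binary dimensions the same enumeration gives 512 classes, which is why grouping them later makes them easier to reason about.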

Before getting to these 9 dimensions I need to get something out of the way: what these dimensions are NOT.

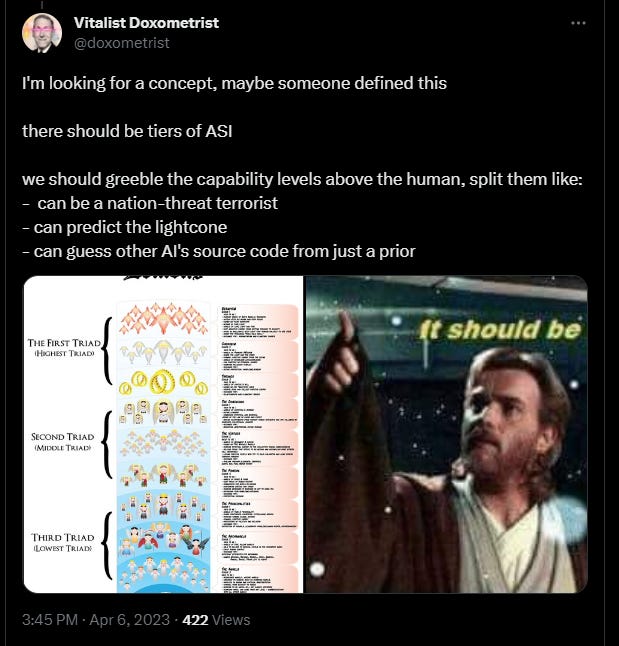

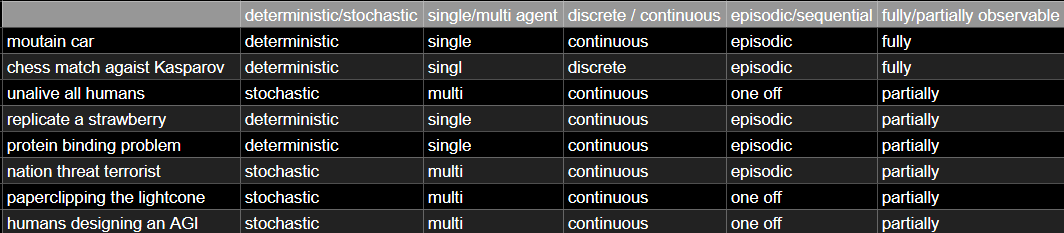

Classic RL dimensions

There are the classic Reinforcement Learning dimensions.

Action space

discrete or continuous

Environment

deterministic vs stochastic

single agent vs multi-agent

discrete vs continuous

episodic vs sequential

fully observable vs partially observable

Let's take some popular problems and apply these categories:

If these look unfamiliar, I’ll explain in a bit.

Note that these are different from complexity classes (O(n) or O(n log n)). Complexity is a useful and basic distinction, but too narrow for our purposes.

Let's examine different, more practical problems to get more of these distinctions.

What is reality for most agents here? That differs depending on society. The reality encountered by a newborn AGI would be stochastic, multi-agent, continuous, partially observable. The episodic-vs-sequential is perhaps the most important feature here, but the discussion of it in RL context is quite limited in scope.
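As a rough sketch, the classic RL dimensions can be held in a small record and compared across problems. The class name, field names and the helper are my own inventions for illustration. The newborn-AGI values come from the paragraph above (unstated dimensions are left as `None`), while the chess labels are an assumption on my part and might be disputed.

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass(frozen=True)
class ClassicRLProfile:
    """Each field is True, False, or None when left unspecified."""
    stochastic: Optional[bool] = None            # False means deterministic
    multi_agent: Optional[bool] = None           # False means single agent
    continuous: Optional[bool] = None            # False means discrete environment
    sequential: Optional[bool] = None            # False means episodic
    partially_observable: Optional[bool] = None  # False means fully observable
    continuous_actions: Optional[bool] = None    # False means discrete action space

def differing_dimensions(a, b):
    """Names of dimensions where both profiles are specified and disagree."""
    return [
        f.name
        for f in fields(a)
        if getattr(a, f.name) is not None
        and getattr(b, f.name) is not None
        and getattr(a, f.name) != getattr(b, f.name)
    ]

# From the text: stochastic, multi-agent, continuous, partially observable.
newborn_agi = ClassicRLProfile(
    stochastic=True, multi_agent=True, continuous=True, partially_observable=True
)

# Assumed labels for chess, for illustration only.
chess = ClassicRLProfile(
    stochastic=False, multi_agent=True, continuous=False,
    sequential=True, partially_observable=False, continuous_actions=False,
)

print(differing_dimensions(newborn_agi, chess))
```

Leaving a field as `None` rather than guessing keeps the comparison honest: only dimensions that both profiles actually commit to are counted as differences.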

We'll discuss all these cases under a new set of dimensions.

The 9 dimensions in 3 sections

Here I propose a set of 9 characteristics that go beyond the classical RL dimensions. These are divided into three sections. The first section covers dimensions that are visible on the agent's first action: things you know about the problem just by examining it beforehand. The second covers those that appear shortly into solving it. The third is for situations where the problem is deep, existential to your Being, with a long run and long-term consequences. The distinctions are a bit fuzzy, but should be clear enough at this point. In principle the sorting into 3 groups is not necessary, but it makes the dimensions easier to remember and reason about.

I'll go through each and provide some examples to make it crystal clear. Some are intuitive, but some not quite.

Initial action

Let's imagine we see a new problem, and we here means - a human, a bureaucracy made of humans, or an AI.

Right on first try (or the couple of first tries)

Practice of death (to an extent).

Defense when attacked with a knife in the street - if you had practice before it's different than trying to think on your feet.

At the opposite pole are the antifragile problems, where through failure you actually learn more.

Transference potential

This section is basically just 'applicability of transfer learning'.

Suppose you play chess with friends every week. One week you play with Bob, the next with Alice. You notice they have different playstyles. At the start of the year you were losing to both in 60% of games; by June your win rate against Bob had increased to 60%, but against Alice it remained the same. In a certain sense these are two different games.

That resembles the difference between science and engineering. Science, like general chess knowledge, is general, while engineering is more specific: an engineering challenge for a specific plane or bridge is analogous to studying to 'solve' a particular opponent.

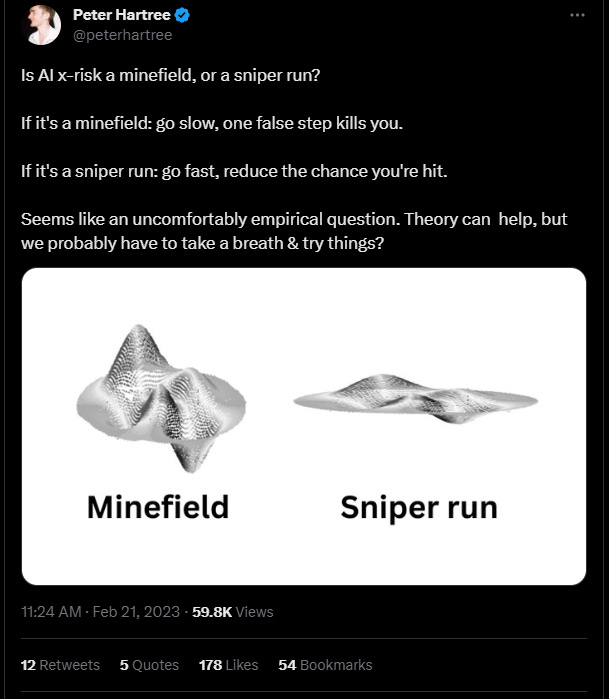

Speed vs risk

https://twitter.com/peterhartree/status/1627992713894281217

This got me thinking, and out loud it goes like this: the minefield vs sniper run distinction is not about finding 1 good solution out of many but about avoiding worst-case scenarios. That sounds like a dimension where maxipok or maximin is the optimal strategy for timeless payoff. The speed issue needs to be factored in. In both you choose a trajectory in 4d space where speed is already included.

Consider traversal of the space in the Bostromian sense, where the dimensions are position, speed and risk. That is a 3d diagram. Let's put position (X) and speed (Y) on the XY plane and risk, the value axis, on Z. You want to reach some final destination with positive payoff, and then we see the difference. For a sniper run the risk is higher at lower speeds, but uniform across positions. It is lower, and also nearly uniform, at higher speeds. Here, if we reduce position to a point, we see a triangle with risk inversely proportional to speed.

For a minefield speed is also a factor: arguably, higher speed means less time for error checking. Here risk is directly proportional to speed.

This seems reducible to a dimension of speed-risk correlation for a given problem.
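A toy sketch of that reduction: assume a risk curve for each scenario and look at the sign of the speed-risk correlation. Both risk functions below are assumptions made up for illustration (1/speed for the sniper run, linear in speed for the minefield), not measurements of anything.

```python
def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

speeds = [1.0, 2.0, 3.0, 4.0, 5.0]

def sniper_run_risk(speed):
    # Assumed shape: the slower you move, the longer you are exposed.
    return 1.0 / speed

def minefield_risk(speed):
    # Assumed shape: the faster you move, the less time for error checking.
    return 0.1 * speed

sniper_corr = pearson(speeds, [sniper_run_risk(s) for s in speeds])
minefield_corr = pearson(speeds, [minefield_risk(s) for s in speeds])

print(sniper_corr < 0)     # speed and risk move in opposite directions
print(minefield_corr > 0)  # speed and risk move together
```

Under these assumed curves the dimension collapses to a single signed number per problem, negative for the sniper run and positive for the minefield.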

Cycles in the short term

Ok, now we're getting started with the problem. We tried some moves that appear moderately effective, and to some extent the strategies we honed through life before apply here. It is a multi-step problem, though, and now we'll encounter different issues.

Searchability of action space

Now this is not necessarily size. Size does not capture the actual search cost or the compute cost of prediction.

Chess is easy to search, as the rules stay the same throughout the game and the full board is visible. The space is smaller than in Go, it is discrete, and in theory any player can come up with any move. A different problem is the structure of a career trajectory. The initial choice of career, the specific jobs you take and the direction of your skills are full of uncertainty. Some opportunities might never appear on your board of actions to take. You might go to conferences, but you can only attend some of them and need to choose between them. There are more differences than that, but they'll be seen in upcoming dimensions.

Feedback frequency

This is a very intuitive dimension.

It tells you how often you get information about where you are. The missile knows where it is. This is a direct extension of the episodic-vs-sequential distinction.

If you're fighting an interstellar war without faster-than-light travel, your feedback rate is much lower than in a local planetary conflict.

Games can finish through time running out or through reaching an objective. LessWrong thought usually frames the episodic-vs-sequential tension for reality as one-shot maximization of time-discounted utility over the lightcone. Of course there are counterarguments, most notably Nick Bostrom's argument about the possible trillions of trillions of future human lives, which rejects the time-discounting factor.

See also timeless decision theory.

Step cost

This is also very intuitive. In chess the step cost is small actually, the issue is with choosing which one to do. Each step has the same cost (movement of max 2 pieces).

Let's see a problem where step cost is a bottleneck, while choosing the action is trivial.

Consider opening a 3-digit lock without relying on sound (it's just digital). We can do it many times. What will be the benefit of many attempts? None. If we know each digit is 0-9 there are 10^3 = 1000 combinations, and if the number is truly random there's nothing we can do but try them all. Brute-forcing a problem means facing the issue of step cost.
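A minimal sketch of the lock example, assuming a uniformly random secret and a fixed search order from 000 upwards. The function name `attempts_to_open` is made up for this illustration. Choosing the next action is trivial here, so the total cost is just the number of steps times the cost of each.

```python
from itertools import product

DIGITS = "0123456789"

def attempts_to_open(secret, length=3):
    """Count tries needed when codes are tested in order 000, 001, ..."""
    for attempt_number, candidate in enumerate(product(DIGITS, repeat=length), start=1):
        if "".join(candidate) == secret:
            return attempt_number
    raise ValueError("secret is not a valid code")

all_codes = ["".join(c) for c in product(DIGITS, repeat=3)]

# Average over every possible secret, i.e. a uniformly random lock.
average_attempts = sum(attempts_to_open(code) for code in all_codes) / len(all_codes)

print(len(all_codes))      # 1000 possible codes
print(average_attempts)    # 500.5 tries on average
```

No ordering of the guesses improves on that average for a truly random code, so the only lever left is making each individual attempt cheaper.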

Cycles in the long term

Finally we reach the long-term, complex problems. Here the integrity and coherence of the subject are put into question, its fundamental relation to the problem itself is examined, and a vast arsenal of methods is at its disposal.

Demonstrability

This is mostly talked about in the context of philosophy of science. In a surprising turn of events in this futuristic discourse we go back to the 17th century and the Englishman Thomas Hobbes.

For some reason his views are mentioned in the Wikipedia article on 'antiscience'.

He described his view more in a separate work, but for our purposes here this brief section of the famous ‘Leviathan’ will suffice.

But I'm going to make it even shorter. His idea is that we can only properly call an inquiry 'science' if we design problem instances ourselves. Note that it is quite similar to Hume's call for book burning:

If we take in our hand any volume; of divinity or school metaphysics, for instance; let us ask, Does it contain any abstract reasoning concerning quantity or number? No. Does it contain any experimental reasoning concerning matters of fact and existence? No. Commit it then to the flames: for it can contain nothing but sophistry and illusion. ― David Hume

Hobbes therefore says that experimental, consequence-related sciences are superior to memory-based ones. For him the anti-scientific would include the modern disciplines of history, paleontology, population genetics and Marxism. See also Baconian science. Creationists really like these approaches for obvious reasons.

Now let's generalize beyond mere science and into everyday life. Science has some problems about which an intuitive hypothesis is formed, followed by theory-crafting and finally experiment, with falsification or verification pending new evidence. At least according to Popper. Can all problems be given such a treatment? Hypothesis and theory come around easily; in many cases the problem is with testing.

This is another way to get the distinction: whether you can manipulate the phenomenon. What about gravitational waves? The LIGO experiment doesn't produce gravitational phenomena, it merely measures them. The prediction then consists in an instrument reading at some point in time. That point is usually relative to some other observable event, such as the merger of black holes and the electromagnetically visible traces of that.

Insideness

The idea behind this is primarily inspired by thinking about Balaji Srinivasan's "prime number maze". Basically you can teach a rat to solve a maze by taking every third turn. But if instead of 'all 3s' you give the rodent prime numbers, it gets lost.
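To see why the second rule is harder to pick up from the inside, here is a small sketch comparing the two turning rules. The encoding of the maze as numbered junctions is my own assumption, and the helper names are invented for this illustration.

```python
def is_prime(n):
    """Trial-division primality check, fine for small junction numbers."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True

def turns_every_third(junctions):
    """Junction numbers where the 'all 3s' rule says to turn."""
    return [j for j in range(1, junctions + 1) if j % 3 == 0]

def turns_at_primes(junctions):
    """Junction numbers where the prime rule says to turn."""
    return [j for j in range(1, junctions + 1) if is_prime(j)]

def gaps(turns):
    """Distance between consecutive turns: the only thing a local habit tracks."""
    return [b - a for a, b in zip(turns, turns[1:])]

print(gaps(turns_every_third(20)))  # [3, 3, 3, 3, 3]
print(gaps(turns_at_primes(20)))    # [1, 2, 2, 4, 2, 4, 2]
```

The first rule reduces to one constant gap, which a habit can encode. The second has no constant gap, so a walker who only counts steps since the last turn has nothing stable to learn.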

Srinivasan says:

We are just like rats in a maze. If most humans were dropped in a prime number maze, they would probably not figure out how to determine the correct path, the turning rule (even if this rule is simple.)

This obviously has to do with path-dependence. Path dependence by definition means that there is some process, possibly cyclical on a timescale larger than your immediate one. Now there are many contingent situations in life that one does not really mind. But what if they are problems? Then it's more tricky.

Let's think through some examples.

Historical process is an obvious one, with many tragedies of people caught in the turning gears of History.

On a micro scale we see this in RTS (real-time strategy) games. You need to think in-time: your decision time is limited. That is quite hard. You can memorize the build order and keep effective habits, but you cannot compute every decision from first principles. People talk about 'game sense' as a ready set of heuristics, as you have no time to extrapolate explicit calculations far into the future. AI chess engines have similar environments.

Insideness is in a sense similar to searchability of the action space, but is stronger.

If you are on the inside of the problem, searching through the action space competes for resources - such as attention - with doing the actual moves! What is more, you cannot predict the state space of the environment through Markov simulation, you need to extrapolate from global trends. These trends are often beyond your power and you cannot influence them.

A more limited example is the Rubik's Cube. The fastest solvers in the world look at the scrambled cube, think about the state space and conjure a sequence of moves in their head. Then they put it down without changing anything.

Next they start the actual timed step - putting the cube into order. That involves recall of the sequence of moves and execution through nimble finger use. (ok sometimes toes are used too).

Now you're probably thinking: that's just like the demonstrability that was described a minute ago!

I thought this through at length. I asked GPT-4 for help and this is the result, a 2x2 matrix of different problems. It demonstrates that these 2 dimensions are truly different.

Edge case - inside the problem vs demonstrability

A. Inside the Problem + Demonstrable: An engineer is tasked with building a bridge. She's 'inside' the problem, meaning she is immersed in it and must work through it. The problem is 'demonstrable' in Hobbes' sense because the engineer constructs the solution (the bridge) herself.

B. Outside the Problem + Demonstrable: A mathematician is trying to solve a complex equation created by a colleague. He's 'outside' the problem, meaning he didn't create it, but he's tasked with finding the solution. The problem is 'demonstrable' because he understands the mathematical principles that underpin the equation and is therefore able to solve it.

C. Inside the Problem + Indemonstrable: A doctor is trying to treat a novel viral disease. She's 'inside' the problem as she's directly involved and tasked with finding a solution, but the problem is 'indemonstrable' because the disease is a natural occurrence, and our understanding of its precise causes and potential treatments is limited.

D. Outside the Problem + Indemonstrable: A climate scientist studying global warming. He's 'outside' the problem, in that he did not cause global warming, but he's studying it. However, it's 'indemonstrable' because the causes and effects are complex, multifaceted, and not fully understood - they can't be solely constructed by human understanding or intervention.

This matrix illustrates that the nature of a problem, our relationship to it, and our ability to solve it can greatly vary, offering a diverse array of scenarios. The interplay between the theories of Srinivasan and Hobbes provides a useful lens through which to examine these scenarios.

Payoff clarity

The most complex dimension is left to the end, but it is a necessary one. Payoff clarity is not about the distribution of effects of the good/bad solutions. Solving the problem will change the environment, and to the extent that you are dependent on the environment it will change you too.

Solving some problems might lead you into a situation where you'd prefer you hadn't solved them and had gone somewhere else. It is about approximating the future value function. There is something perverse and mystical about this self-transformation. Do you want it badly enough? How will you be changed on the axes besides the main one which causes you to consider this?

An obvious example is the modern practice of gender transition, where the social (social transition) and physical (medical transition) changes alter the agent, often irreversibly. There are changes to physiology and emotional processing, for instance from estrogen or from being perceived differently by others in society.

These cannot be predicted fully due to individual social circumstances and physiological differences. An individual can have some vision of life post-change, but the full picture and competence towards achieving happiness are delegated to the future version of the individual.

For older examples on an individual level see Pascal's wager or Kierkegaardian Leap of Faith. Now we also need to examine the collective level. Nations and corporations make choices weighing the wellbeing of the present against future constituents. Lower the retirement age or make natalist policies? The nature of the timescale of the problem and the inevitable change in the decision maker itself are quite clear.

The final and most dramatic example, and the one tied most closely to AGI, is the struggle between Cosmists and Terrans in Hugo de Garis' 'Artilect War', a book with quite a long full title.

The Artilect War: Cosmists vs. Terrans: A Bitter Controversy Concerning Whether Humanity Should Build Godlike Massively Intelligent Machines

There a debate takes place between the anthropocentric Terrans, who want to preserve the current shape of the human and avoid the 'no humans in the future' scenario, and the Cosmists, who see a teleology in the Universe and want to partake in the ascension towards higher, transhuman forms, or even allow AI to eradicate humans. Here the agent is the civilization, and this choice is one we are very much experiencing.

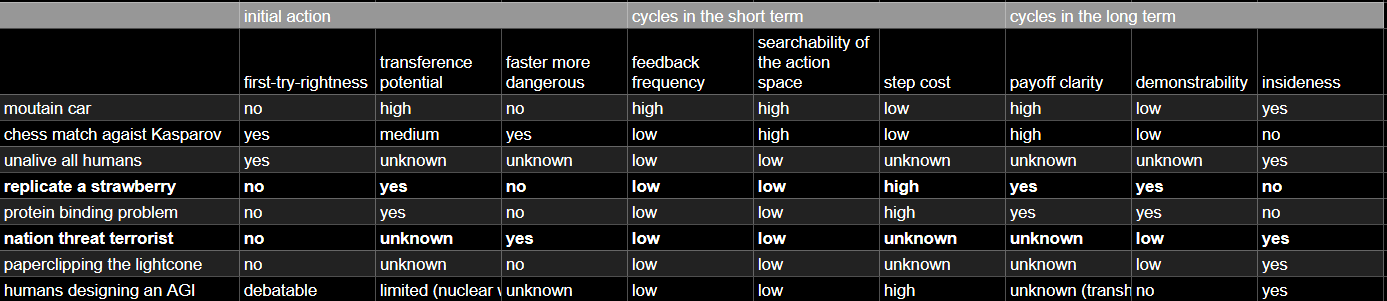

Application of the 9 dimensions to the problems

Test problems

The exact labels might be disputed.

AI related dimensions

The exact labels might be disputed, especially as I lack domain knowledge in fields like chess or fruit.

I won't get into too much detail here. One point that needs to be raised is that the strawberry test row, which corresponds to the STEM AI discussed on alignmentforum.org, is quite different from the threat models of 'AI that is a terrorist threatening nations' or 'paperclipping the lightcone'.

Further research

Maxipok rule as derived by Bostrom might be another dimension to the problem that is not covered here.

The way I'd see this work: for some problems, as the number of run paths approaches infinity, the a posteriori distribution of payoffs over action sequences bundles into one big mass of moderate payoffs, while other problems, with a bimodal distribution between ok and great, would encourage risk-taking much more.

Conclusion

Let's recall the 9 dimensions for reference.

Transference potential

First-try-rightness

Speed vs risk

Step cost

Feedback frequency

Searchability of the action space

Payoff clarity

Outside-inside (Markov)

Demonstrability
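For reference, the same nine dimensions can be kept in a small lookup keyed by the three sections used throughout this post. This is just a convenience sketch, and the names `SECTIONS` and `section_of` are made up for illustration.

```python
# The nine dimensions grouped by the three sections of this post.
SECTIONS = {
    "Initial action": [
        "First-try-rightness",
        "Transference potential",
        "Speed vs risk",
    ],
    "Cycles in the short term": [
        "Searchability of the action space",
        "Feedback frequency",
        "Step cost",
    ],
    "Cycles in the long term": [
        "Demonstrability",
        "Outside-inside (Markov)",
        "Payoff clarity",
    ],
}

def section_of(dimension):
    """Return the section a dimension belongs to."""
    for section, dims in SECTIONS.items():
        if dimension in dims:
            return section
    raise KeyError(dimension)

all_dimensions = [d for dims in SECTIONS.values() for d in dims]

print(len(all_dimensions))      # 9
print(section_of("Step cost"))  # Cycles in the short term
```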

Formalization of the AGI-related discourse will be greatly aided by this outline. Treat this as the start of a discussion; probably some dimensions are missing. It is less likely that the dimensions here could be reduced to a smaller number, and 9 is a nice number.

I expect specific failure modes of AGI to be different across these dimensions, as outlined in the last of the tables above.

Coming up next: a summary of what the pagan mindset is analytically in general, ‘in the wild’, in preparation for the alchemic isolation of the pagan/acc memeplex. It’s already there, it just needs naming.

Links to other parts:

rats and eaccs 1

1.1 https://doxometrist.substack.com/p/tpot-hermeticism-or-a-pagan-guide

1.2 https://doxometrist.substack.com/p/scenarios-of-the-near-future

making it 2

2.1 https://doxometrist.substack.com/p/tech-stack-for-anarchist-ai

2.2 https://doxometrist.substack.com/p/hiding-agi-from-the-regime

2.3 https://doxometrist.substack.com/p/the-unholy-seduction-of-open-source

2.4 https://doxometrist.substack.com/p/making-anarchist-llm

AI POV 3

3.1 https://doxometrist.substack.com/p/part-51-human-desires-why-cev-coherent

3.2 https://doxometrist.substack.com/p/you-wont-believe-these-9-dimensions

4 (techno)animist trends

4.1 https://doxometrist.substack.com/p/riding-the-re-enchantment-wave-animism

4.2 https://doxometrist.substack.com/p/part-7-tpot-is-technoanimist

5 pagan/acc https://doxometrist.substack.com/p/pagan/acc-manifesto