Take a look outside your window. Are they there? Who? The robots, marching incessantly?

When did you last have your blood checked? Did they detect the nanobots?

Are you reading this in a bunker trying to survive a supervirus plague that originated in unknown circumstances?

If you answered 'no' to all of these, it means there's still time.

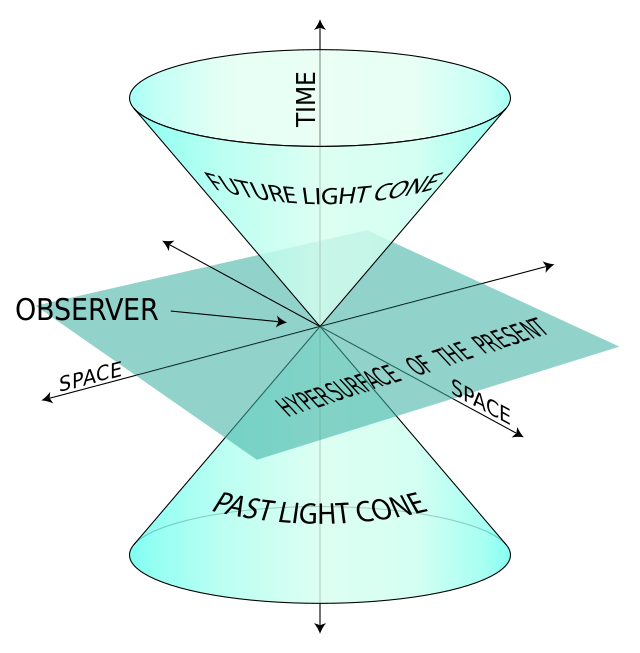

I am writing this in March 2023, and you necessarily exist in the future relative to me: in The Lightcone.

What is AI doom about (summary)

It’s like the Y2K scare, the 2012 Mayan apocalypse, or any other entry in the long list of apocalyptic predictions. This time, though, the distribution of IQ among the scaremongers is different. Self-proclaimed Rationalists roam the memetic landscape from their LessWrong headquarters, preparing for the End: a neo-Bohemian, decadent, tech-adjacent cult with a charismatic leader bearing the enigmatic name Eliezer, and a best-selling Harry Potter fanfic.

Their story is a long one: the peace that lasted until the postrats (post-rationalists) split off from the rats, the achievements, the downfalls and the great feasts. There are many strange and wonderful characters, and nightmare-fuel stories, including getting blackmailed by time-traveling lizards. Some legends even mention orgies...

Enough about the rats. What is the apocalypse they are waiting for?

AGI, or Artificial General Intelligence, or more specifically ASI, Artificial SuperIntelligence. It is a Frankenstein or sorcerer's apprentice scenario, where a machine rebels against its masters. All who do not yield to the machine's will get turned into paperclips. They call that Misaligned AGI. The rats' Messiah would be the coming of a Friendly Artificial General Intelligence. An old trope, I know, but that's what we're dealing with in 2023. I'll leave the modern reincarnations of Pygmalion for later. Now you'll ask: how do the rats prepare for the AGI-pocalypse?

They try to save everyone! They have been at it since 1998, in fact!

Like in The Matrix, they've been trying to wake up the young and bright to the looming threat. How is it going for them? The cult leader said that all efforts are dubious and that the best thing to do is to die with dignity. That post was followed by another one listing all the reasons why saving the world won't happen: 43 of them, in fact. That is Eliezer's "AGI ruin" post, from June 2022. I will refer back to it often; unless indicated otherwise, any numbered references within this blog series are to that post. He does not judge his cult to be doing its task well:

38. It does not appear to me that the field of 'AI safety' is currently being remotely productive on tackling its enormous lethal problems.

Many do not listen to the doomsayers. Some think AGI won't happen at all; others consider it to be far in the future. A vocal minority wants to speed up the creation of AGI, trusting the Universe that AGI can't be hostile. They call themselves effective accelerationists (e/acc), and here is their manifesto.

There are nice people in both groups, yet that is not all.

Wait, but what is the title even doing here?

TPOT hermeticism??? Pagan guide to saving the Lightcone?

What does it even mean? (Or "wat means?", to use a popular phrase.)

I mentioned the postrats as a splinter group of the rats. Their native habitat is TPOT, 'this part of Twitter', at least when they are not at an unplugged meditation retreat inna woods.

TPOT talks about things such as memetic warfare, tulpas and egregores. It is a centre of research on these topics.

They are quite an esoteric bunch, more diverse and open-minded than the rats. Unlike the rats, they are humble and cozy.

Many of the e/acc are from that background. That is where I, Doxometrist, come from.

And TPOT is only tangentially concerned with AGI.

Hermeticism is a field of knowledge relating to Hermes. Usually that refers specifically to an ancient Egyptian scholar of esoterica, Hermes Trismegistus (Thrice-Great). I'll explain more later.

Paganism needs no explanation. The Lightcone you already know, and the dangers it needs saving from are many. Misaligned AGI is one of the most important right now, but it is not the only threat.

How do these all fit together?

I believe TPOT has the right approach to many things, an approach that works for the people who practice it.

I believe TPOT's way of thinking deserves more exposure.

I believe TPOT as a scene is a good candidate to save us from many ills.

There are hidden jewels of thought there that need refining, polishing and putting on a pedestal.

Two such jewels are the ability to imagine a positive future with AI, and a pagan-ish mindset.

Why non-doom scenarios? The optimism comes from the e/acc people, who are part of TPOT. They justify their extreme optimism using Qabbalistic thermodynamic reasoning and a variant of Pascal's wager.

The latter goes like this: if AI doom happens, it won't matter whether we were optimistic or not; if it doesn't, believing and acting with confidence maximizes the chances of ending up in a favorable scenario.
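The structure of this wager can be made concrete with a toy payoff table. This is a minimal sketch, not anything from the e/acc manifesto: the stance names and all payoff numbers are hypothetical placeholders, and only their ordering matters. Doom zeroes out both stances, while in the no-doom branch the optimistic stance is assumed to do better, so optimism is never worse and wins for any probability of doom below 1.

```python
# Toy payoff table for the optimism wager. All numbers are hypothetical;
# only their ordering (doom = 0 for both, optimism better otherwise) matters.
PAYOFF = {
    ("optimistic", "doom"): 0.0,
    ("pessimistic", "doom"): 0.0,      # under doom, our stance is irrelevant
    ("optimistic", "no_doom"): 1.0,
    ("pessimistic", "no_doom"): 0.6,   # assumed: confidence steers toward better futures
}
STANCES = ("optimistic", "pessimistic")
OUTCOMES = ("doom", "no_doom")


def expected_value(stance: str, p_doom: float) -> float:
    """Probability-weighted payoff of a stance, given the probability of doom."""
    return p_doom * PAYOFF[(stance, "doom")] + (1 - p_doom) * PAYOFF[(stance, "no_doom")]


def weakly_dominates(a: str, b: str) -> bool:
    """True if stance `a` is never worse than stance `b` in any outcome."""
    return all(PAYOFF[(a, o)] >= PAYOFF[(b, o)] for o in OUTCOMES)


if __name__ == "__main__":
    print(weakly_dominates("optimistic", "pessimistic"))  # True
    for p in (0.1, 0.5, 0.9):
        best = max(STANCES, key=lambda s: expected_value(s, p))
        print(p, best)  # "optimistic" for every p below 1
```

Because optimism weakly dominates, you don't need to know the actual probability of doom for the choice to come out the same; that is the whole trick of a Pascal-style wager, and also where critics will attack the assumed payoffs.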

Why TPOT? Mainstream discussions don't cover the risk from AI in enough detail. The other extreme is LessWrong (the rats).

LessWrongers go too deep. Mathy stuff, which is needed, is mixed with a pop approach. LW is closely tied to academia and theory.

E/acc goes off the other end, and gets criticized for 'vibes-based reasoning'.

Where to go from there?

Series goals

I am starting a blog post series that follows the middle way between the extreme optimism of e/acc and the doomerism of old-school rats. Along the way, I will try to uncover the best parts of TPOT and put them together into something that can protect us from misaligned AGI and other undesired futures.

The alignment problem will be considered nearly from first principles. No need to read the Sequences or dozens of LW posts on the topic.

About the pagan bit: my approach and investigative style are postmodern. I will not attempt exegesis of ancient texts for the basics; that shall come in time. First I develop a modern understanding, and only later compare it with the ancients. The two should be reconcilable; it's not as if the ancients must have gotten everything right, and they disagreed among themselves too.

That is quite a standard mindset for TPOT.

Series overview

Note: some parts might be split across several posts to keep them manageable.

This post: introduction to the setting and boundary conditions

Outline of specific future scenarios. We need more high-resolution pictures than just heaven, business as usual, or paperclips.

Weaknesses of AGI

Outline of a decentralized AI - a fairly technical post.

Case for panpsychism: this is where it starts getting esoteric

Case for polytheism as already being a de facto state of affairs in TPOT - this will go back to many themes from this post

Pagan/acc - tenets and agenda - the final synthesis and outline of what to do next.

Ground truth

I said 'nearly' from first principles. Reinventing the wheel would be too much effort, so I bootstrap a bit from Eliezer's previous body of work on the topic.

There are 4 points I import from that library, and I expect them to be fairly uncontroversial.

These are the things we need to acknowledge. Discussion of them should be limited to this post: I will delete any comments under later posts that argue against this part.

Criticisms of intelligence explosion

Many people have no idea what they're talking about and make very bad points. All the arguments claiming that rapidly self-improving AIs are impossible are here.

Potential of artificial systems is huge

In 2017 Eliezer wrote a critique of a certain AI skeptic. There are two points to take from it. First, the possibility of rapid self-improvement to superhuman levels in a narrow domain has been conclusively demonstrated:

AlphaGo Zero taught itself to play at a vastly human-superior level, in three days, by self-play, from scratch, using a much simpler architecture with no ‘instinct’ in the form of precomputed features.

Second, there are important systems in our world that an AGI could game to heavily disrupt our civilization, even without visible genocidal intentions. The speed advantage alone gives it an important edge:

It can’t eat the Internet? It can’t eat the stock market? It can’t crack the protein folding problem and deploy arbitrary biological systems? It can’t get anything done by thinking a million times faster than we do?
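To put "a million times faster" in perspective, here is a back-of-the-envelope sketch. The millionfold speedup is the figure from the quote; the rest is my own assumption for illustration: thinking speed is taken to scale linearly, ignoring bottlenecks such as waiting for real-world experiments, and `subjective_years` is a hypothetical helper.

```python
HOURS_PER_YEAR = 365.25 * 24  # 8766 hours in an average (Julian) year


def subjective_years(wall_clock_hours: float, speedup: float) -> float:
    """Years of human-equivalent thinking done in `wall_clock_hours` by a mind `speedup` times faster.

    Assumes thinking scales linearly with speed (a simplifying assumption).
    """
    return wall_clock_hours * speedup / HOURS_PER_YEAR


if __name__ == "__main__":
    speedup = 1_000_000  # "a million times faster than we do"
    print(f"1 hour -> {subjective_years(1, speedup):.1f} years")   # 1 hour -> 114.1 years
    print(f"1 day  -> {subjective_years(24, speedup):,.0f} years")  # 1 day  -> 2,738 years
```

In other words, every wall-clock hour would give such a mind more than a human lifetime of deliberation, which is why speed alone counts as an edge even before any qualitative superiority.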

General case is hopeless

I don't consider an RL (Reinforcement Learning) algorithm trained on eons of data to be alignable. Many values only make sense within a narrow range of power levels. The ethics of a logistics company executive differ from those of a medieval peasant trading at a market, or of a second-century warlord. They deal with different scales of power, even if the problems are similar.

Eliezer argues a similar point in "AGI ruin".

[from 30] million training runs of failing to build nanotechnology. There is no pivotal act this weak; there's no known case where you can entrain a safe level of ability on a safe environment where you can cheaply do millions of runs, and deploy that capability to save the world and prevent the next AGI project up from destroying the world two years later.

[from 19] there is no known way to use the paradigm of loss functions, sensory inputs, and/or reward inputs, to optimize anything within a cognitive system to point at particular things within the environment - to point to latent events and objects and properties in the environment, rather than relatively shallow functions of the sense data and reward.

To elaborate: this is true of humans as well. The state aligns humans, there's no doubt about that. There are regions of action space, such as stealing and murdering, that the state frowns upon. Efficient states impose their value function onto individuals and tie latent events (an act of robbery) to shallow functions of sense data and reward.

Total physical control. It's been like that since the pharaohs.
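The point about latent events versus shallow sense data can be made concrete with a toy model. This is my own illustration, not taken from "AGI ruin": the actions, gains, the `PENALTY` value and the observation probabilities are all hypothetical. The "state" can only reward or punish what its sensors register, so a reward maximizer learns to avoid being seen rather than to avoid robbery, until observation becomes total.

```python
from dataclasses import dataclass, replace

PENALTY = 10.0  # hypothetical punishment for a robbery the state observes


@dataclass(frozen=True)
class Action:
    name: str
    gain: float           # what the individual gets out of the act
    is_robbery: bool      # latent fact about the world
    observed_prob: float  # chance the state's sensors register the act


def state_reward(action: Action, observed: bool) -> float:
    """The state conditions only on sense data: it punishes robberies it observes."""
    penalty = PENALTY if (action.is_robbery and observed) else 0.0
    return action.gain - penalty


def expected_reward(action: Action) -> float:
    p = action.observed_prob
    return p * state_reward(action, True) + (1 - p) * state_reward(action, False)


def best_action(actions: list[Action]) -> Action:
    return max(actions, key=expected_reward)


ACTIONS = [
    Action("work honestly", gain=1.0, is_robbery=False, observed_prob=0.9),
    Action("rob in daylight", gain=5.0, is_robbery=True, observed_prob=0.9),
    Action("rob unobserved", gain=5.0, is_robbery=True, observed_prob=0.05),
]

if __name__ == "__main__":
    # Partial surveillance: the optimizer avoids being seen, not robbing.
    print(best_action(ACTIONS).name)  # rob unobserved
    # Total physical control: every act is observed, and honesty wins.
    watched = [replace(a, observed_prob=1.0) for a in ACTIONS]
    print(best_action(watched).name)  # work honestly
```

The shallow reward only tracks the latent event when observation is complete, which is the pharaohs' solution and not one we can easily apply to the inside of a neural network.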

Supervision vs. speed of optimization tradeoff

Eliezer says in "AGI ruin" that systems with human feedback or supervision will have a performance penalty relative to free-roaming ones. That is a tradeoff we need to work with.

Conclusion

Now I need you to do two things. First, comment with 2 numbers: your % chance of AGI by 2030, and your % chance of humanity's doom before 2050. I will collate these numbers into statistics and a prediction.

Second, subscribe to this Substack. It's free, and you don't want to miss out, do you?

THE FATE OF THE LIGHTCONE IS IN YOUR HANDS, ANON

Links to other parts:

rats and eaccs 1

1.1 https://doxometrist.substack.com/p/tpot-hermeticism-or-a-pagan-guide

1.2 https://doxometrist.substack.com/p/scenarios-of-the-near-future

making it 2

2.1 https://doxometrist.substack.com/p/tech-stack-for-anarchist-ai

2.2 https://doxometrist.substack.com/p/hiding-agi-from-the-regime

2.3 https://doxometrist.substack.com/p/the-unholy-seduction-of-open-source

2.4 https://doxometrist.substack.com/p/making-anarchist-llm

AI POV 3

3.1 https://doxometrist.substack.com/p/part-51-human-desires-why-cev-coherent

3.2 https://doxometrist.substack.com/p/you-wont-believe-these-9-dimensions

4 (techno)animist trends

4.1 https://doxometrist.substack.com/p/riding-the-re-enchantment-wave-animism

4.2 https://doxometrist.substack.com/p/part-7-tpot-is-technoanimist

5 pagan/acc https://doxometrist.substack.com/p/pagan/acc-manifesto